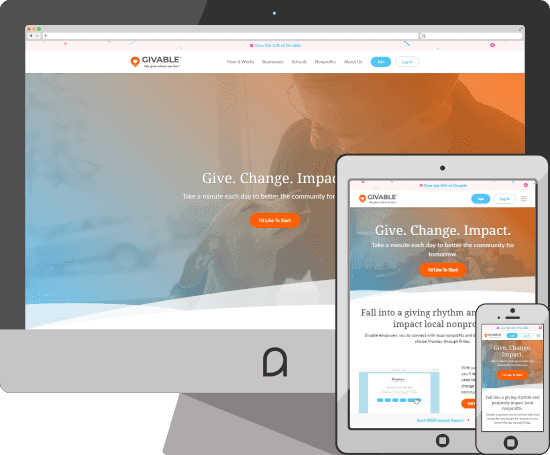

Givable

Givable is a website for grouped donations. I was tasked with rebuilding the frontend, which uses Bootstrap components. I was also tasked with adjusting existing backend features using Python, Django, and Flask. Check out this beautiful creation and the neat web style guide.